Cloud environments such as AWS Lambda, Google Cloud Functions and Microsoft Azure Functions support serverless computing. Stateless functions declare the compute resources they require, and the runtime provisions those resources when the function is invoked. This removes the need for dedicated servers, hence the term serverless. Because dedicated servers are not required, a serverless application scales elastically according to demand.

Functions are written in one of the languages supported by the runtime environment. Node.js, Python and JVM (Java Virtual Machine) based languages, including Java and Scala, are universal; C#, Go and others are less widely supported.

A function is triggered by the service to which it is bound or by direct invocation.

The services to which a function can be bound are those provided and enabled by the runtime environment, such as blob storage services, database services and messaging services, or a custom application service.

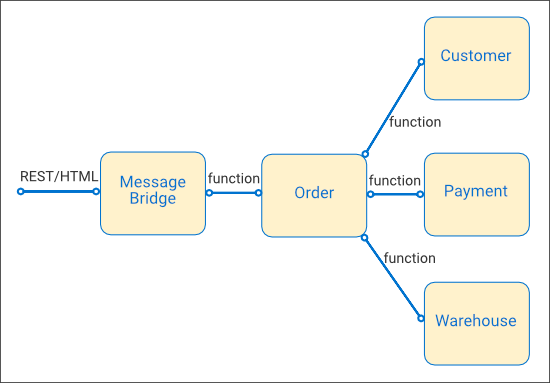

Direct invocation allows the composition of granular microservices. A stereotypical Order microservice, for example, is composed of Customer, Payment and Warehouse microservices, where the operations of each are implemented as functions that the Order service invokes. The Order function may itself be invoked directly or be bound to a RESTful gateway service.

The diagram below depicts a stereotypical Order service implemented with functions invoked by a RESTful gateway service. Compared to the same diagram for a container-based deployment depicted in Microservice Musings : Overview, only the means of invoking the microservices has changed, from "gRPC" to "function".

The APIs used to invoke functions differ for each serverless runtime provider. To allow microservices to be deployed in any serverless or server-based environment, the mechanism used to invoke them must be decoupled using Adapters. Adapters are also required for any runtime service for which an implementation-neutral connector is not available. Example connectors include JDBC, which provides access to SQL databases in Java, and AMQP (Advanced Message Queuing Protocol), which supports asynchronous messaging and has connectors available in several languages.
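As a minimal sketch of the Adapter idea, the Python below keeps the business logic free of any provider API and wraps it in two adapters. The `place_order` operation, the adapter names and the shape of the payload are hypothetical, chosen only for illustration. The `handler(event, context)` signature follows the AWS Lambda convention for Python, and the event is assumed to carry a JSON string in a `body` field, as it would when proxied from an HTTP gateway.

```python
import json
from typing import Any, Callable, Dict

Operation = Callable[[Dict[str, Any]], Dict[str, Any]]


def place_order(order: Dict[str, Any]) -> Dict[str, Any]:
    """Provider-neutral business logic (hypothetical): no runtime API appears here."""
    total = sum(item["price"] * item["quantity"] for item in order["items"])
    return {"customer_id": order["customer_id"], "total": total, "status": "ACCEPTED"}


def lambda_adapter(operation: Operation) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Adapt an operation to an AWS Lambda style handler(event, context).

    Assumption: the event carries the request as a JSON string under "body".
    """
    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        payload = json.loads(event.get("body") or "{}")
        result = operation(payload)
        return {"statusCode": 200, "body": json.dumps(result)}

    return handler


def local_adapter(operation: Operation) -> Operation:
    """Adapt the same operation for in-process use in a server-based deployment."""
    def handler(payload: Dict[str, Any]) -> Dict[str, Any]:
        return operation(payload)

    return handler


# The serverless deployment would name this as its entry point.
lambda_handler = lambda_adapter(place_order)
```

Moving to another provider would then mean writing one more adapter with that provider's handler signature, leaving `place_order` untouched.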

In a serverless environment, each operation supported by a microservice is typically implemented as a function. For complex microservices with many operations, maintaining a function for each is both tiresome and error prone. One solution is to automate their generation and deployment as part of the build process. Another is to use the Command pattern, which enables a single function to handle all the operations of a microservice: the client sends the operation signature and its parameter values, and the function handler uses reflection to invoke the matching operation dynamically.
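The Command pattern approach can be sketched in a few lines of Python, using `getattr` as the reflection mechanism. The `OrderService` class, its two operations and the `{"operation": ..., "params": ...}` command shape are assumptions made for this example, not part of any provider's API.

```python
from typing import Any, Dict, List


class OrderService:
    """Hypothetical microservice whose operations are exposed through one function."""

    def price_order(self, items: List[Dict[str, Any]], discount: float = 0.0) -> float:
        subtotal = sum(item["price"] * item["quantity"] for item in items)
        return subtotal * (1 - discount)

    def describe(self, order_id: str) -> str:
        return f"order {order_id}"


def handle(command: Dict[str, Any], service: Any = None) -> Any:
    """Single function handler: look up the named operation and invoke it."""
    service = service if service is not None else OrderService()
    name = command["operation"]
    # Refuse private and dunder attributes so clients can reach public operations only.
    if name.startswith("_"):
        raise ValueError(f"operation not allowed: {name}")
    operation = getattr(service, name, None)  # reflection: resolve the method by name
    if not callable(operation):
        raise ValueError(f"unknown operation: {name}")
    return operation(**command.get("params", {}))
```

A client would send, for example, `{"operation": "describe", "params": {"order_id": "42"}}`. Adding an operation to the service then requires no new function deployment, at the cost of losing per-operation resource declarations and compile-time checking of the call.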

Understanding the Function Lifecycle

Functions run in a container provisioned by the serverless runtime. Container instances can be in one of three states: cold, warm or hot. Cold containers must be started before they can execute a function; warm containers are running and ready to execute a function; hot containers are running and executing a function. When dispatching work the runtime will always choose an available warm container over a cold one. The availability of warm containers is not guaranteed as they are disposable. Providers use different algorithms, but all will dispose of a warm container to release resources when those resources are contended.
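The three states and the warm-first dispatch rule can be modelled with a toy simulation. This is only a sketch of the behaviour described above, not any provider's actual scheduling algorithm; the `ContainerPool` class and its method names are invented for the example.

```python
from enum import Enum
from typing import List, Tuple


class State(Enum):
    COLD = "cold"  # not running, must be started first
    WARM = "warm"  # running and idle
    HOT = "hot"    # running and executing a function


class ContainerPool:
    """Toy model of a runtime's container pool (hypothetical)."""

    def __init__(self, size: int) -> None:
        self.states: List[State] = [State.COLD] * size

    def dispatch(self) -> Tuple[int, bool]:
        """Assign work to a container; return (index, cold_start)."""
        # Prefer a warm container and fall back to starting a cold one.
        for wanted in (State.WARM, State.COLD):
            for index, state in enumerate(self.states):
                if state is wanted:
                    self.states[index] = State.HOT
                    return index, wanted is State.COLD
        raise RuntimeError("no container available")

    def complete(self, index: int) -> None:
        """The function finished: the container stays running, now idle."""
        self.states[index] = State.WARM

    def reclaim(self, index: int) -> None:
        """The runtime disposes of an idle container to release its resources."""
        if self.states[index] is State.WARM:
            self.states[index] = State.COLD
```

In this model a second invocation that arrives while the first container is still hot is forced onto a cold container, which is the concurrency effect discussed next.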

This lifecycle enables cloud providers to deliver serverless computing at a lower cost than server-based solutions, but it has consequences. The latency of a function varies dramatically depending on the availability of warm containers. Invoking a function which triggers the start of a cold container can take several seconds, as the operating system must be started and, for functions implemented in languages that require a virtual machine, that must be started too. The same invocation when a warm container is available will yield sub-second response times. This is particularly troublesome when concurrency rises with increased transaction rates: additional cold containers may be required to service the load, so latency increases just as demand peaks, which is the opposite of the desired behaviour.

There is much discussion around how to game the algorithms used by serverless providers so that containers are kept warm and deliver the latencies warm containers provide. For real time applications where those latencies are critical, relying on such techniques is not the route to take. At best, they only partially work: to scale elastically in response to increased demand, cold containers will still be required. There is also no guarantee that such gaming will continue to work, as providers may change their algorithms at any time.

Serverless providers could resolve this by offering contracts that guarantee the availability of a defined number of warm containers. Charges would be higher as these containers would consume resources. With reasonable pricing, this may be a cost worth paying for real time, latency critical applications. The alternative is to provision enough servers to satisfy peak demand, and a fixed set of servers lacks the elasticity to scale should its capacity be exceeded.

Conclusion

Serverless computing is a perfect fit for applications and services that do not require consistent low latency. Asynchronous processes are by design tolerant of latency, for example emailing the confirmation of an order. Batch processes, such as those that load, transform and analyse large datasets, have execution times that dwarf any latency incurred when triggered.

For applications and services that require consistent low latencies, serverless computing is a poor choice as these latencies are not guaranteed. This may change in the future, but given the current state of play an elastic server cluster remains the preferred solution.