For those less familiar with microservice architectures, this overview briefly describes some key concepts.

In a microservice architecture, business services are composed of individually deployable, granular services. Internally, a microservice may itself be composed of other microservices, which it orchestrates to achieve a business objective.

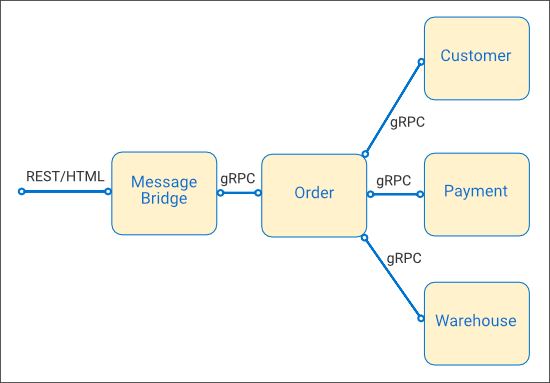

Microservices can be deployed across a heterogeneous mix of hardware architectures, operating systems and implementation languages. For simplicity, all microservices should be connected using the same messaging system. When this is impractical, messaging bridges are used to connect the different internal messaging systems. External systems should always be connected via a messaging bridge, in order to decouple the internal messaging system(s) from the many different external messaging solutions that must be supported.
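The role of a messaging bridge can be illustrated with a minimal sketch. It assumes a hypothetical in-memory `InMemoryBus` class standing in for a real messaging system; the class, the `bridge` function, the topic names and the `bridged_from` field are all invented for illustration and do not correspond to any particular product.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class InMemoryBus:
    """Hypothetical stand-in for a real messaging system (illustration only)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Delivered synchronously to keep the sketch short; a real broker would queue.
        for handler in list(self._handlers[topic]):
            handler(message)


def bridge(source: InMemoryBus, target: InMemoryBus, topic_map: Dict[str, str]) -> None:
    """Forward messages from each source topic to its mapped target topic."""
    for source_topic, target_topic in topic_map.items():
        def forward(message: dict, _target_topic: str = target_topic) -> None:
            # Publish a copy so the two systems never share mutable state.
            target.publish(_target_topic, {**message, "bridged_from": source.name})

        source.subscribe(source_topic, forward)


internal = InMemoryBus("internal")
external = InMemoryBus("external")

received: List[dict] = []
external.subscribe("partner/orders", received.append)

# Only the bridge knows both naming schemes; neither side knows about the other.
bridge(internal, external, {"orders.created": "partner/orders"})
internal.publish("orders.created", {"order_id": 42})
```

Because the topic mapping lives only in the bridge, either messaging system could in principle be replaced without changing the services attached to the other.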

A stereotypical Order microservice composed of Customer, Payment and Warehouse microservices is shown above. The microservices are connected asynchronously via the messaging system, which allows work to be parallelized when practical. The Order microservice is also exposed via a messaging bridge as a RESTful web service for use by external clients.
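As a rough sketch of how asynchronous connections let an orchestrating service parallelize work, the following uses Python's `asyncio`. The three coroutines are hypothetical stand-ins for calls to the Customer, Warehouse and Payment microservices; their names, parameters and return values are assumptions made for illustration, and the short sleeps merely simulate network latency.

```python
import asyncio


async def check_customer(customer_id: str) -> dict:
    await asyncio.sleep(0.01)  # stands in for a call to the Customer microservice
    return {"customer_id": customer_id, "ok": True}


async def reserve_stock(sku: str, quantity: int) -> dict:
    await asyncio.sleep(0.01)  # stands in for a call to the Warehouse microservice
    return {"sku": sku, "reserved": quantity}


async def take_payment(customer_id: str, amount: float) -> dict:
    await asyncio.sleep(0.01)  # stands in for a call to the Payment microservice
    return {"customer_id": customer_id, "charged": amount}


async def place_order(customer_id: str, sku: str, quantity: int, amount: float) -> dict:
    # The customer check and the stock reservation are independent,
    # so they are issued together and awaited as a pair.
    customer, stock = await asyncio.gather(
        check_customer(customer_id),
        reserve_stock(sku, quantity),
    )
    if not customer["ok"]:
        return {"status": "rejected"}
    # Payment depends on both earlier results, so it runs afterwards.
    payment = await take_payment(customer_id, amount)
    return {"status": "accepted", "stock": stock, "payment": payment}


result = asyncio.run(place_order("c-1", "sku-9", 2, 19.98))
```

Only the steps without a dependency between them are run in parallel; which steps qualify is a business decision that this sketch simply assumes.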

In a microservice architecture, each service is isolated, which allows it to evolve independently rather than in lockstep with the infrastructure of the host machine. Isolation is achieved by running each microservice in a virtual machine or a container. There should be just one microservice per virtual machine or container, to decouple dependencies and avoid the lockstep evolution incurred when multiple services are deployed directly on a host.

Containers, such as those managed by Docker Engine, are much lighter than virtual machines because operating system kernel operations are delegated to the host machine. Unlike virtual machines, which can run any operating system supported by the virtualised hardware, containers share the operating system kernel of their host machine. This means that, for example, Linux hosts run lightweight Linux-based containers and Windows hosts run Windows-based containers, such as those built on Nano Server.

Production deployments consist of several host machines, each running many microservices. These machines may be located on-premises, in external private or public clouds or in a hybrid cloud consisting of a mixture of these locations. The host machines may be physical or virtual.

For container deployment at scale, a container management and orchestration solution is required to manage this complexity. Available solutions include Apache Mesos, Docker Swarm and Kubernetes. Of these, Kubernetes has the richest set of features, enjoys the broadest support and is innovating most rapidly.

"Pods are the smallest deployable units of computing that can be created and managed in Kubernetes". Conventionally a Pod contains of one or more co-located containers which share resources and lifecycle. Recent developments, such as the Virtlet project, support Pods that contain a virtual machine. This allows both containers and virtual machines running any operating system supported by the underlying processor architecture to be deployed and orchestrated in the same Kubernetes deployment removing the need for separate environments to orchestrate each.

When separate orchestration solutions are required, the TOSCA specification and supporting tooling can be used to capture cloud application architectures at an abstract level and deploy them to orchestration solutions that support TOSCA.